Bitbucket Runners on K8s

In September 2021, Atlassian announced self-hosted runners for Bitbucket Pipelines.

Background

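Our company mainly uses Bitbucket Cloud as its code hosting platform. A while ago I was assigned a task whose goal was to save money, so I surveyed Bitbucket runners and rolled them out to production.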

Introduction

Bitbucket runners come in two levels:

- Repository runner: usable only by that repository

- Workspace runner: shared by every repository in the workspace

Pros:

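- Access to internal databases: placing the runner inside your own environment lets pipelines reach your own databases, which is safer than exposing them to cloud pipelines

- More resources: the machines that cloud pipelines start are limited in size; if you need more resources, a runner can provide them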

How to run?

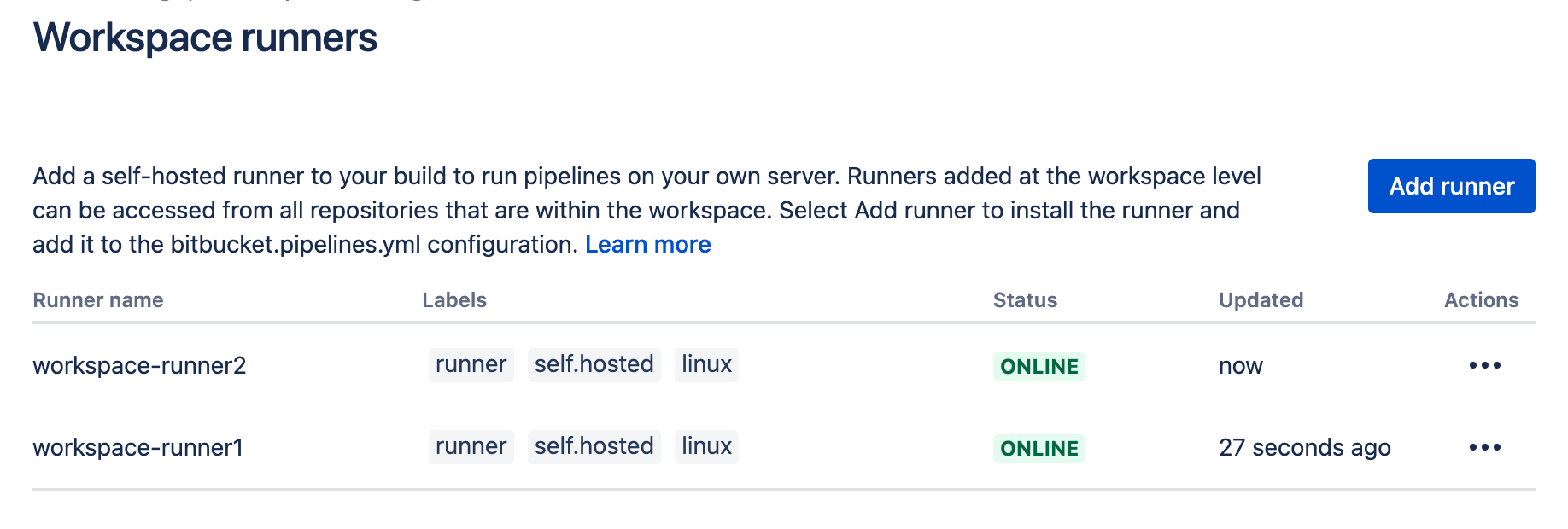

For a workspace-level runner:

Step 1: Visit the URL below, replacing workspace_name with your own workspace.

https://bitbucket.org/<workspace_name>/workspace/settings/addon/admin/pipelines/account-runners

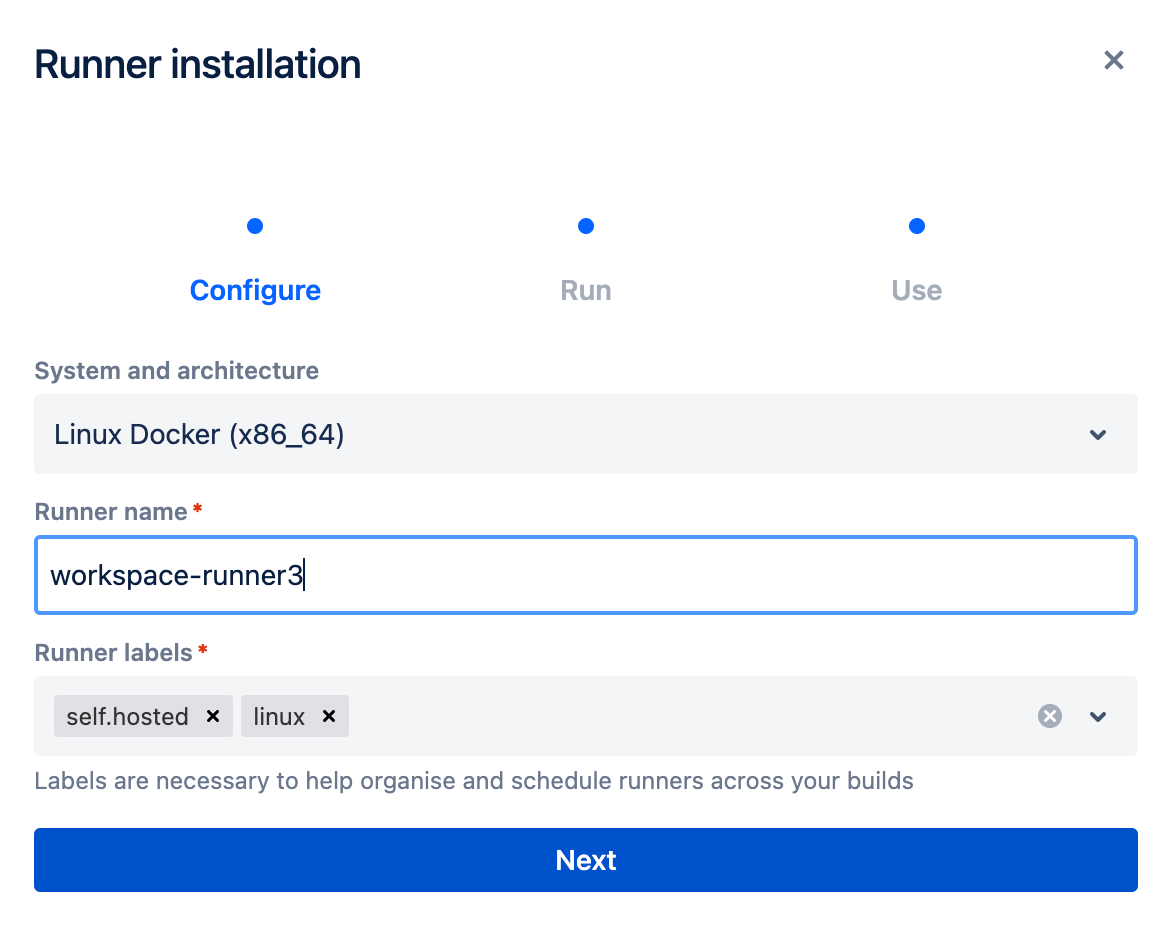

Step 2: Click Add runner, give it a name, then click Next.

Step 3: The following values will be shown. Keep them somewhere safe.

-> ACCOUNT_UUID RUNNER_UUID OAUTH_CLIENT_ID OAUTH_CLIENT_SECRET

    # copy this command with the token to run on the command line
    docker container run -it -v /tmp:/tmp -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/docker/containers:/var/lib/docker/containers:ro \
      -e ACCOUNT_UUID={__ACCOUNT_UUID__} \
      -e RUNNER_UUID={__RUNNER_UUID__} \
      -e RUNTIME_PREREQUISITES_ENABLED=true \
      -e OAUTH_CLIENT_ID={__OAUTH_CLIENT_ID__} \
      -e OAUTH_CLIENT_SECRET={__OAUTH_CLIENT_SECRET__} \
      -e WORKING_DIRECTORY=/tmp \
      --name runner-df17339e-7684-5bbf-b268-dc57b2c5a58d \
      docker-public.packages.atlassian.com/sox/atlassian/bitbucket-pipelines-runner:1

Step4: Deploy on k8s

apiVersion: v1

kind: Secret

metadata:

name: runner-oauth-credentials

stringData:

oauthClientId: ${OAUTH_CLIENT_ID}

oauthClientSecret: ${OAUTH_CLIENT_SECRET}apiVersion: batch/v1

kind: Job

metadata:

name: runner

spec:

template:

metadata:

labels:

accountUuid: ${ACCOUNT_UUID}

runnerUuid: ${RUNNER_UUID}

spec:

containers:

- name: bitbucket-k8s-runner

image: docker-public.packages.atlassian.com/sox/atlassian/bitbucket-pipelines-runner

env:

- name: ACCOUNT_UUID

value: "{${ACCOUNT_UUID}}"

- name: RUNNER_UUID

value: "{${RUNNER_UUID}}"

- name: OAUTH_CLIENT_ID

valueFrom:

secretKeyRef:

name: runner-oauth-credentials

key: oauthClientId

- name: OAUTH_CLIENT_SECRET

valueFrom:

secretKeyRef:

name: runner-oauth-credentials

key: oauthClientSecret

- name: WORKING_DIRECTORY

value: "/tmp"

volumeMounts:

- name: tmp

mountPath: /tmp

- name: docker-containers

mountPath: /var/lib/docker/containers

readOnly: true

- name: var-run

mountPath: /var/run

- name: docker-in-docker

image: docker:20.10.7-dind

securityContext:

privileged: true

volumeMounts:

- name: tmp

mountPath: /tmp

- name: docker-containers

mountPath: /var/lib/docker/containers

- name: var-run

mountPath: /var/run

restartPolicy: OnFailure

volumes:

- name: tmp

- name: docker-containers

- name: var-run

backoffLimit: 6

completions: 1

parallelism: 1# create namespace

kubectl create namespace bitbucket-runner

# apply yaml

kubectl -n bitbucket-runner apply -f secrets.yaml

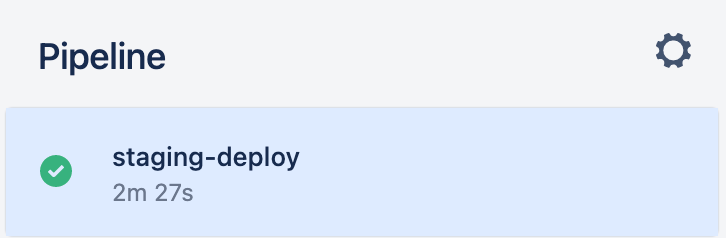

kubectl -n bitbucket-runner apply -f job.yaml順利的話會看到bitbucket runner 處於running的狀態?

Use runner on pipeline

Just add this label to any step that should use the runner:

    runs-on:
      - 'self.hosted'

bitbucket-pipelines.yml:

    pipelines:
      custom:
        pipeline:
          - step:
              name: Step1
              # default: 4 GB, 2x: 8 GB, 4x: 16 GB, 8x: 32 GB
              size: 8x
              runs-on:
                - 'self.hosted'
                - 'my.custom.label'
              script:
                - echo "This step will run on a self hosted runner with 32 GB of memory.";
          - step:
              name: Step2
              script:
                - echo "This step will run on Atlassian's infrastructure as usual.";

Congratulations, you are now using a pipeline runner!
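As far as I understand Bitbucket's documentation, a step is only dispatched to a runner that carries every label listed under `runs-on`. A minimal Python sketch of that matching rule follows; the function name and the two example runner label sets are hypothetical and only serve as illustration.

```python
def runner_matches(step_labels, runner_labels):
    """Return True when the runner carries every label the step asks for."""
    return set(step_labels) <= set(runner_labels)


# Labels requested by Step1 in the pipeline above.
step1 = ["self.hosted", "my.custom.label"]

# Hypothetical runners registered in the workspace.
plain_runner = {"self.hosted"}
custom_runner = {"self.hosted", "my.custom.label"}

print(runner_matches(step1, plain_runner))   # 'my.custom.label' is missing
print(runner_matches(step1, custom_runner))  # both labels are present
```

Under this reading, a runner that only has `self.hosted` would never pick up Step1, so custom labels need to be set on the runner when it is created.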

Cost Analysis

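Objective: reduce billed pipeline minutes and lower cost.

The runners are built on spot instances with scale in/out, and are shut down outside working hours to save money.

Pipelines are billed at 1 USD per 100 minutes. One spot instance costs about 0.066 USD per hour, which is the price of 6.6 pipeline minutes (based on our usage scenario).

Taking everything together, using runners works out cheaper for us.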
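The comparison above can be worked through as plain arithmetic. The sketch below uses only the two prices quoted in this post. The monthly build minutes and runner uptime in the usage lines are hypothetical numbers, and the sketch assumes that steps executed on a self-hosted runner are not billed as pipeline minutes, which is the premise of the comparison.

```python
# Prices quoted in this post (USD).
PIPELINE_USD_PER_MINUTE = 1 / 100   # 100 pipeline minutes cost 1 USD
SPOT_USD_PER_HOUR = 0.066           # one spot instance for one hour


def break_even_minutes_per_hour():
    """Pipeline minutes that cost the same as one spot-instance hour."""
    return SPOT_USD_PER_HOUR / PIPELINE_USD_PER_MINUTE


def monthly_saving_usd(build_minutes, runner_hours):
    """Cloud pipeline bill minus spot-instance bill for the same month."""
    cloud_cost = build_minutes * PIPELINE_USD_PER_MINUTE
    runner_cost = runner_hours * SPOT_USD_PER_HOUR
    return cloud_cost - runner_cost


# Hypothetical month: 5000 build minutes, runner up 14 hours a day on 22 weekdays.
print(round(break_even_minutes_per_hour(), 2))
print(round(monthly_saving_usd(5000, 14 * 22), 2))
```

A positive saving means the runner is cheaper. Put differently, a runner pays for itself once it executes more than about 6.6 build minutes per hour of uptime.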

Deploy to EKS

I did not follow the official instructions for this deployment; I heavily customized many parts to fit my own needs.

Tools used:

- eks v1.21

- gomplate v3.11.1

- keda v2.7

- karpenter v0.18.0

First, I use gomplate to render the YAML, pulling the relevant variables out into a config file.

    repo-runner1:
      ACCOUNT_UUID: "{ACCOUNT_UUID}"
      REPOSITORY_UUID: "{REPOSITORY_UUID}"
      RUNNER_UUID: "{RUNNER_UUID}"
      OAUTH_CLIENT_ID: "OAUTH_CLIENT_ID"
      OAUTH_CLIENT_SECRET: "OAUTH_CLIENT_SECRET"
      SCALE: false
      DOUBLERESOURCE: false

Next comes the template file:

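- Service account: needs an IAM role bound to it so it can log in to AWS public ECR and avoid hitting the rate limit

- Deployment: I changed the original Job into a Deployment. The Job would still work, but I am more familiar with Deployments, and the official manifest ships a Job that never finishes, which felt odd to me.

  - .spec.template.spec.nodeSelector: selects the nodes provisioned by Karpenter

  - .spec.template.spec.affinity.podAntiAffinity: keeps the pods from all crowding onto the same node

  - .spec.template.spec.initContainers: fetches the key used to log in to public ECR

  - .spec.template.spec.containers.0.lifecycle.preStop: this container is the runner. After some experiments I found that the Java process inside it has to be killed for the runner to show as offline in Bitbucket Cloud; otherwise nobody notices it is dead until a task gets assigned to it.

  - .spec.template.spec.containers.1.lifecycle.postStart: this container is dind. Once the container has started, it runs an ECR login command to avoid triggering the rate limit.

- ScaledObject: a CRD installed by KEDA. I use the cron scaler to handle scale in.

I won't go into detail on Karpenter and KEDA here; I'll write a separate post when I get the chance.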
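For readers unfamiliar with the cron scaler, the effect of the schedule used in this post's template (start `0 8 * * 1-5`, end `0 22 * * 1-5`, timezone Asia/Taipei) can be approximated as a simple time-window check. This is a sketch of the intended behaviour, not KEDA's actual implementation; the function name is hypothetical, and a fixed UTC+8 offset stands in for Asia/Taipei.

```python
from datetime import datetime, timedelta, timezone

# Asia/Taipei is UTC+8 with no daylight saving time, so a fixed offset is enough here.
TAIPEI = timezone(timedelta(hours=8))


def runner_should_be_up(now):
    """Return True when a timezone-aware `now` falls inside 08:00-22:00, Monday to Friday."""
    local = now.astimezone(TAIPEI)
    is_weekday = local.weekday() < 5   # Monday is 0, Friday is 4
    in_hours = 8 <= local.hour < 22    # from 08:00 up to, but not including, 22:00
    return is_weekday and in_hours


# 2022-10-17 is a Monday; 01:00 UTC is 09:00 in Taipei.
print(runner_should_be_up(datetime(2022, 10, 17, 1, 0, tzinfo=timezone.utc)))
```

Outside that window the Deployment is expected to scale in, which is how the runner gets switched off after working hours.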

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      annotations:
        eks.amazonaws.com/role-arn: iam_arn
      name: runner
    {{ range $key, $value := (ds "env_config") -}}
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ $key }}
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: {{ $key }}
      template:
        metadata:
          labels:
            app: {{ $key }}
            schedule: runner
        spec:
          serviceAccountName: runner
          {{ if $value.DOUBLERESOURCE -}}
          nodeSelector:
            karpenter.sh/provisioner-name: runner2x
          {{ else -}}
          nodeSelector:
            karpenter.sh/provisioner-name: runner
          {{ end -}}
          tolerations:
            - key: "runner"
              operator: "Exists"
              effect: "NoSchedule"
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                - labelSelector:
                    matchLabels:
                      schedule: runner
                  topologyKey: kubernetes.io/hostname
          initContainers:
            - name: aws-cli
              image: public.ecr.aws/bitnami/aws-cli:2.8.2
              command: ['sh', '-c', "aws ecr-public get-login-password --region us-east-1 > /tmp/aws"]
              securityContext:
                runAsUser: 0
              volumeMounts:
                - name: tmp
                  mountPath: /tmp
          containers:
            - name: {{ $key }}
              image: docker-public.packages.atlassian.com/sox/atlassian/bitbucket-pipelines-runner
              resources:
                requests:
                  memory: "0.5Gi"
                  cpu: "0.5"
              lifecycle:
                preStop:
                  exec:
                    command:
                      - /bin/sh
                      - -c
                      - kill $(ps aux | grep java | grep -v grep| awk '{print $1}')
              livenessProbe:
                exec:
                  command:
                    - /bin/sh
                    - -c
                    - grep "Updating runner state" /tmp/*/runner.log | tail -n 1 | grep ONLINE
                failureThreshold: 3
                initialDelaySeconds: 10
                periodSeconds: 20
                successThreshold: 1
                timeoutSeconds: 1
              env:
                - name: ACCOUNT_UUID
                  value: "{{ $value.ACCOUNT_UUID }}"
                - name: REPOSITORY_UUID
                  value: "{{ $value.REPOSITORY_UUID }}"
                - name: RUNNER_UUID
                  value: "{{ $value.RUNNER_UUID }}"
                - name: OAUTH_CLIENT_ID
                  value: "{{ $value.OAUTH_CLIENT_ID }}"
                - name: OAUTH_CLIENT_SECRET
                  value: "{{ $value.OAUTH_CLIENT_SECRET }}"
                - name: WORKING_DIRECTORY
                  value: "/tmp"
              volumeMounts:
                - name: tmp
                  mountPath: /tmp
                - name: docker-containers
                  mountPath: /var/lib/docker/containers
                  readOnly: true
                - name: var-run
                  mountPath: /var/run
            - name: {{ $key }}-docker-in-docker
              image: docker:20.10.5-dind
              resources:
                requests:
                  memory: "2Gi"
                  cpu: "1"
              securityContext:
                privileged: true
              lifecycle:
                postStart:
                  exec:
                    command:
                      - /bin/sh
                      - -c
                      - sleep 10; cat /tmp/aws | docker login --username AWS --password-stdin <public ECR address>
              volumeMounts:
                - name: tmp
                  mountPath: /tmp
                - name: docker-containers
                  mountPath: /var/lib/docker/containers
                - name: var-run
                  mountPath: /var/run
          restartPolicy: Always
          volumes:
            - name: tmp
            - name: docker-containers
            - name: var-run
    {{ if $value.SCALE}}
    ---
    apiVersion: keda.sh/v1alpha1
    kind: ScaledObject
    metadata:
      name: {{ $key }}
    spec:
      maxReplicaCount: 1
      cooldownPeriod: 10
      scaleTargetRef:
        name: {{ $key }}
      triggers:
        - type: cron
          metadata:
            timezone: Asia/Taipei
            start: 0 8 * * 1-5
            end: 0 22 * * 1-5
            desiredReplicas: "1"
    {{ end -}}
    {{ end -}}

Finally, render the template and apply it.
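The post does not show the exact render and apply commands, so here is one way the step might look, wrapped in Python. It assumes the config file is passed to gomplate as the `env_config` datasource (the name the template reads with `ds`), that gomplate writes the rendered manifests to stdout, and that `kubectl apply -f -` reads them from stdin. The file names and the namespace in the usage note are hypothetical.

```python
import subprocess


def render_command(config_path, template_path):
    """gomplate invocation that exposes the config file as the `env_config` datasource."""
    return ["gomplate", "-d", f"env_config={config_path}", "-f", template_path]


def apply_command(namespace):
    """kubectl invocation that applies manifests read from stdin."""
    return ["kubectl", "-n", namespace, "apply", "-f", "-"]


def render_and_apply(config_path, template_path, namespace):
    """Render the template, then pipe the resulting manifests into kubectl."""
    rendered = subprocess.run(
        render_command(config_path, template_path),
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    subprocess.run(apply_command(namespace), input=rendered, check=True, text=True)
```

With both tools installed, a call such as `render_and_apply("config.yaml", "runner.yaml.tmpl", "bitbucket-runner")` would render one Deployment per entry in the config file and apply them in a single pass.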

Possible Issues

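1. Status 500

If you apply the YAML above you should not run into this problem. For the detailed cause, see this issue. In my case it was related to how Kubernetes scores pods (the error below is raised while adjusting the OOM score), although I have also seen reports online that upgrading containerd/Docker fixes it. For my situation, the simplest fix is to remember to set CPU/memory requests on the pod.

    Status 500: {"message":"io.containerd.runc.v2: failed to adjust OOM score for shim: set shim OOM score: write /proc/703/oom_score_adj: invalid argument\n: exit status 1: unknown"}

2. Ruby pitfall

Our company uses Ruby, and we hit this problem when running CI on the runner. I spent a long time debugging before discovering it was a CPU problem (see the related issue). Fix: switch to the latest Intel CPUs.

    bundle exec rspec --exclude-pattern "spec/{swagger}/**/*_spec.rb"
    /opt/atlassian/pipelines/agent/build/vendor/bundle/ruby/2.5.0/gems/ffi-1.11.1/lib/ffi/library.rb:112: [BUG] Illegal instruction at 0x00007f92d26d5025

Further reading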

- It’s official! Announcing Runners in Bitbucket Pipelines

- Configure your runner in bitbucket-pipelines.yml

Future plan

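I happened to notice that Bitbucket has released a runner controller that can automatically scale the number of runners out and in. I'll find time to put it into production later.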